Bad A/B tests can lead to bad product decisions. Here’s how two experts decide when to test, when to trust their gut, and how to make data-driven decisions that actually work.

I recently spoke with Oji Udezue (author of “Building Rocketships” and former CPO at Typeform & Calendly) and Eric Metelka (Head of Product at Eppo) about how to run the most effective A/B testing program possible.

Here’s how you can run the best A/B tests possible to drive your business forward. 👇

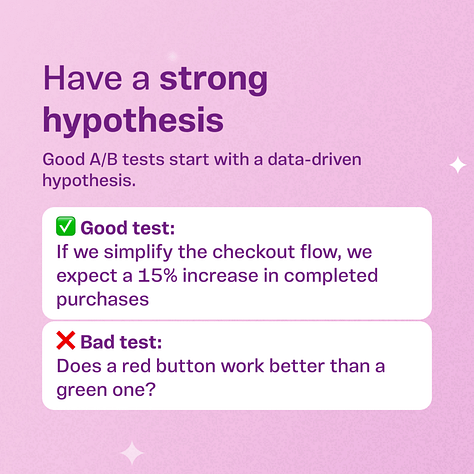

1. Have a good hypothesis

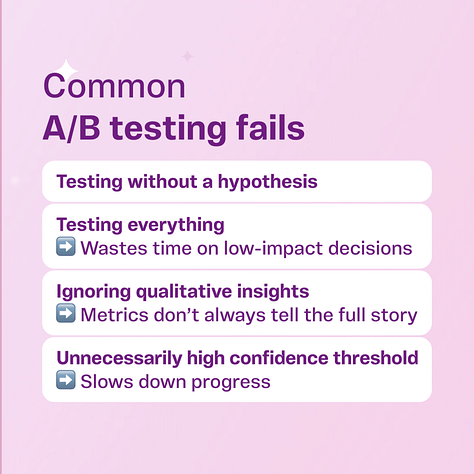

Testing isn’t “does a red button work better than a green button?” That’s just throwing spaghetti against the wall.

“Having a good hypothesis is always the start,” says Eric. “Have a belief based on quantitative or qualitative data that says, ‘if I make this change, these users are going to do this thing.’”

Then make sure you understand how the change ties to your North Star goal.

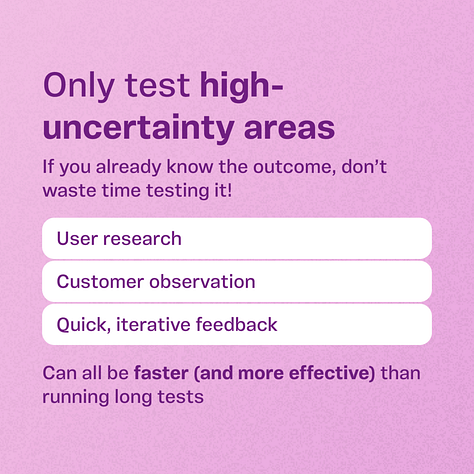

2. Only test high-uncertainty areas

Not everything needs an A/B test. Eric recommends, “A/B test what has high uncertainty. If it's not high uncertainty, just build it.”

Oji adds, “A/B tests take time. I’ve done tests that take a month to reach statistical significance. A month before I can make a decision? Are you kidding me?”

Consumer tech companies like Facebook can test at scale and hit significance in days. But B2B SaaS products usually see far less traffic, so the same test can run for weeks, and test cycles that long are impractical.
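To see why traffic matters so much, here is a rough back-of-the-envelope sketch. It uses the standard sample-size approximation for a two-sided two-proportion z-test. The baseline rate, target lift, and daily visitor counts are made-up numbers for illustration, not figures from Oji or Eric.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_lift, alpha=0.05, power=0.80):
    """Approximate users needed in EACH variant to detect the given lift.

    Standard normal-approximation formula for comparing two proportions
    with a two-sided test. All inputs here are illustrative assumptions.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the confidence level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_finish(daily_visitors, n_per_variant, variants=2):
    """Days needed if all daily visitors are split across the variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical scenario: 5% baseline conversion, hoping to detect a 10% relative lift.
n = sample_size_per_variant(baseline=0.05, relative_lift=0.10)

b2b_days = days_to_finish(daily_visitors=2_000, n_per_variant=n)
consumer_days = days_to_finish(daily_visitors=500_000, n_per_variant=n)

print(f"Users needed per variant: {n}")
print(f"B2B SaaS at 2,000 visitors/day: {b2b_days} days")
print(f"Consumer app at 500,000 visitors/day: {consumer_days} day(s)")
```

With these assumed inputs, the low-traffic product needs about a month while the high-traffic one finishes in a day. Same test, very different wait.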

Consider alternatives like user research, observation, and iterative feedback.

From Oji: “Talk to customers, get into their workflows, observe them, and bring them along the journey.”

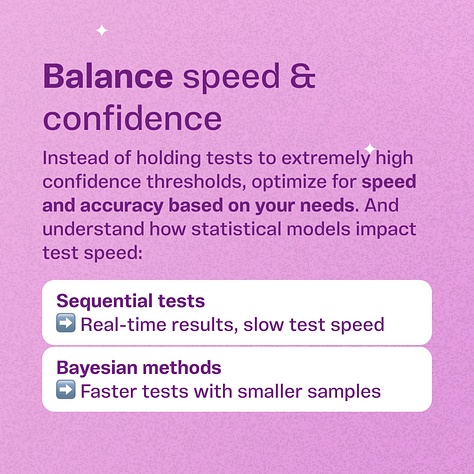

3. Balance speed and confidence

Oji flagged another common mistake: holding tests to an unnecessarily high confidence threshold.

Eric reinforced this, pointing out that different statistical models impact test speed:

“Most companies use sequential testing, which lets you check results in real time, but that slows down test speed. Bayesian methods can actually help you run tests faster with smaller samples.”

Instead of running one large, time-consuming test, teams can iterate faster by running several smaller tests at slightly lower confidence levels.
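For a feel of what a Bayesian readout can look like, here is a minimal sketch of one common approach: a Beta-Binomial model that estimates the probability the variant beats the control. This is an illustration only, not necessarily the method Eric or Eppo uses. The uniform prior, the sample counts, and the function name are all assumptions.

```python
import random


def prob_variant_beats_control(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Monte Carlo estimate of P(variant rate > control rate).

    Assumes a uniform Beta(1, 1) prior on each conversion rate, so each
    posterior is Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


# Hypothetical small test: 1,000 users per arm.
p_better = prob_variant_beats_control(conv_a=50, n_a=1_000, conv_b=65, n_b=1_000)
print(f"Probability the variant beats control: {p_better:.2f}")
```

A team could agree up front on a threshold for shipping (say 0.90, a hypothetical choice) that reflects how costly a wrong call would be. A lower bar suits cheap, reversible changes.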

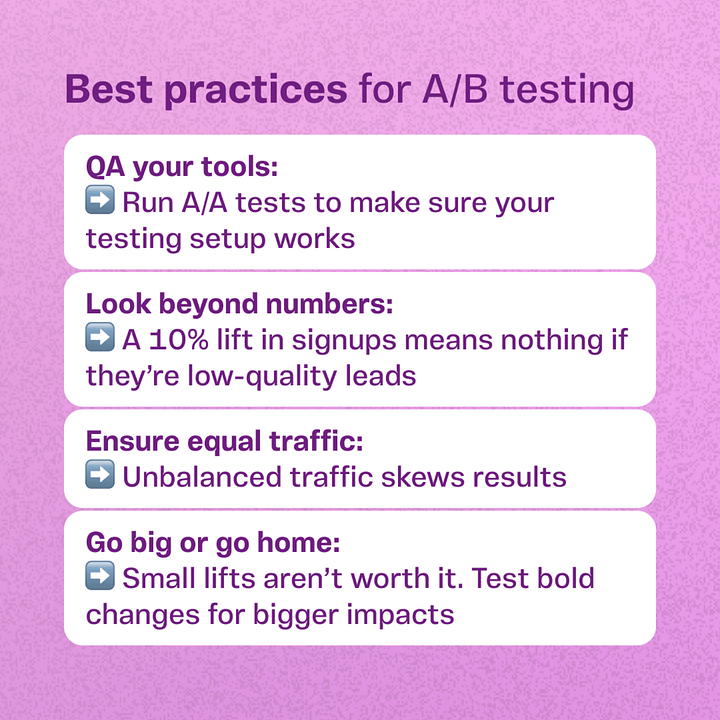

⚡️ If you do decide to run an A/B test, here are some more best practices:

QA your tools: Run A/A tests to ensure your testing tools work properly. You’d be surprised how often a supposed “variant” wins with >95% confidence, despite being identical to the control.

Look beyond the numbers: Metrics don’t tell the full story. Are you trading 10 high-value leads for 15 low-quality ones? Optimize for results, not just mid-funnel gains.

Ensure equal traffic (or understand ratios): Don’t just look at raw conversions; compare conversion rates. With unbalanced traffic, one variant may show more conversions simply because it had more people to convert.
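The traffic point is easy to check in a few lines. The sketch below compares conversion rates instead of raw counts, then runs a chi-square goodness-of-fit test to flag a split that drifts from what you intended. The visitor and conversion numbers are invented for illustration, and the function names are my own.

```python
import math


def conversion_rate(conversions, visitors):
    """Conversions as a share of visitors, so unequal arms compare fairly."""
    return conversions / visitors


def split_mismatch_p_value(visitors_a, visitors_b, expected_share_a=0.5):
    """P-value for whether the observed traffic split matches the intended one.

    Chi-square goodness-of-fit test with 1 degree of freedom. A very small
    p-value suggests the assignment is not splitting traffic as configured.
    """
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # Survival function of a chi-square distribution with 1 degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical results from a test that was meant to split traffic 50/50.
control = {"visitors": 10_000, "conversions": 500}
variant = {"visitors": 12_000, "conversions": 560}

rate_control = conversion_rate(control["conversions"], control["visitors"])
rate_variant = conversion_rate(variant["conversions"], variant["visitors"])
p_split = split_mismatch_p_value(control["visitors"], variant["visitors"])

print(f"Control rate: {rate_control:.2%}, variant rate: {rate_variant:.2%}")
print(f"Traffic split p-value: {p_split:.3g}")
```

In this made-up example the variant has more raw conversions but a lower conversion rate, and the split is far enough from 50/50 that the setup deserves a look before anyone reads the results.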

Check out both full conversations from their appearances on LaunchPod:

📺 Oji Udezue: https://tinyurl.com/hzy35t54

📺 Eric Metelka: https://tinyurl.com/mtnsz4hr

What’s your team’s go-to strategy for validating ideas quickly? 👇